“How do we measure how our brand is showing up inside of LLMs?” I get this question almost weekly at this point. My answer isn’t complicated.

Through a mix of understanding your customer’s needs, using AI brand visibility monitoring software, and a little elbow grease.

Just kidding about the elbow grease…

Also kidding about it being that simple. It’s way more nuanced than that. That’s why I’m writing this.

You don’t have to stay in the dark. A new class of tools can replace outdated keyword trackers and show you where you stand.

These tools give you the data to make educated guesses about how and where you’re showing up inside Generative AI environments.

Before I dive into the actual tools and tracking methodology, it’s important to understand that while these tools are useful, they have limitations.

Such as:

- Every AI model is built differently and produces different results

- Every single user, device, and location affects the outcome

- Answers to the same prompt shift constantly, sometimes from one hour to the next

- The underlying models themselves are updated frequently

The real benefit of these tools is that they give you directionally useful data points.

You can act on that info and combine it with everything else you’ve got, like GA4, Microsoft Clarity, your CRM, and direct customer feedback, to build a GEO (Generative Engine Optimization) strategy that actually works.

That being said, for the impatient among you, here’s what you came here for:

Top 5 AI Brand Visibility Monitoring Tools TL;DR

- Scrunch – Best For Proactive AI Optimization

- Peec AI – Best For Actionable Insights

- Profound – Best For Enterprise SEO Teams

- Hall – Best For Beginners

- Otterly.AI – Best For Startups

Got it?

Okay. For the rest of you, let’s get to the real meat and potatoes so you can use these tools effectively.

Starting with a brief definition to ground us.

My body is ready.

What is AI Brand Visibility Monitoring?

AI Brand Visibility Monitoring uses structured prompts across large language models to track brand mentions.

It identifies where a brand appears, how often, and in what context, enabling companies to monitor digital presence, uncover opportunities, and detect misrepresentation or competitive overlap in AI-generated content.

It’s the modern version of keyword rank tracking, but across AI systems instead of search engines.

This gives you real-time insight into brand presence, competitive positioning, and where you need to improve.

What Is AI Brand Visibility Monitoring Software?

AI brand visibility monitoring software is a category of tools that use structured prompts with large language models to track brand mentions.

They show where your brand appears, how often, and how it compares to competitors. These tools provide data for visibility analysis, brand positioning, and competitive benchmarking.

These tools surface which content types and third-party sources LLMs reference most, and which competitors are winning in specific scenarios.

They’re not flawless, but the trends over time give you a clear direction for how to adjust your strategy as the AI ecosystem evolves.

How do you measure how your brand shows up inside LLMs?

It’s not magic.

It’s just structured curiosity and a bit of discipline.

Here’s the step-by-step process I use and recommend if you actually want to understand your visibility inside language models without guessing.

Step 1: Talk to your customers. Seriously.

Most companies think they know their customers because they have a Notion doc with a persona named “B2B Brian” who likes cold brew and hates friction. That’s not what we’re doing here.

If you want to understand how your audience would naturally interact with a language model, you need to understand how they think, what they struggle with, and how they actually talk.

How to gather this info:

- Surveys: Use Typeform or Google Forms. Keep it simple. 5 to 7 questions max. Incentivize with something decent—a gift card, free resource, early access. Don’t make it feel like a chore.

- Customer interviews: Use tools like Calendly for scheduling and Zoom or Loom for recording. Aim for 10 to 15 customers across different roles and maturity levels. Use Rev or Otter.ai to transcribe everything automatically.

- Email outreach: Write a casual email to 20 recent buyers or active users. Subject line: “Can I ask you a weird favor?” Works more often than you’d think.

What to ask:

- “What were you trying to solve when you found us?”

- “What were you searching for before landing here?”

- “Which tools, companies, or content helped along the way?”

- “What was confusing or frustrating during that journey?”

- “How would you describe what we do to a colleague?”

Good vs bad execution:

- Bad survey:

- “On a scale of 1 to 10, how likely are you to recommend us to a friend?”

That’s nice for a board meeting. Useless for prompt building.

- Good survey:

- “What would you type into Google or ChatGPT if you were looking for a solution like ours?”

That’s the gold. It gets you the raw language they actually use.

- Bad interview:

- Talking 80% of the time, pitching your roadmap, and asking if they “like the new dashboard.”

- Good interview:

- You say five words total. The customer does the rest. You ask open-ended questions and listen for the real stuff: emotional language, moments of confusion, what they thought you were before they learned what you are.

What to look for in the answers:

- Repeated phrases and mental models (like “I was just trying to figure out why X wasn’t working”)

- Competitors or categories they referenced before finding you

- What “trigger” got them to search in the first place

You’re building your prompt dataset from their words, not yours. That’s how you stop guessing and start reverse-engineering actual buyer intent inside AI models.

Step 2: Audit your existing insight goldmine

You already have 80% of what you need. It’s just buried in tools nobody checks and calls nobody replays.

This is the part where you stop assuming what your audience cares about and start pulling receipts from actual buyer behavior.

How to gather this info:

CRM Mining

Go into HubSpot, Salesforce, Close, whatever you use. Pull a list of:

- Deals closed in the last 6 months

- Deals lost with detailed notes

- Any deal tagged with “stuck,” “on hold,” or “not ready”

Export that into a spreadsheet.

- Look at deal notes, contact activities, and reasons listed in your stages.

- You’ll start to see patterns like “lack of internal buy-in” or “not sure if this fits our use case” show up.

Call Recording Tools

If you’re using Gong, Chorus, or even Zoom recordings, this is your gold mine. Search for common phrases like:

- “We’re currently using…”

- “We were looking for…”

- “What’s the difference between you and…”

- “Our biggest pain point is…”

Then listen. You’ll hear your buyers’ thought process in real time, and that gives you killer raw material for prompt creation.
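If your call tool lets you export transcripts as plain text, the phrase search can be sketched in a few lines of Python. This is a rough sketch, not a Gong or Chorus integration: the function names (`find_phrase_snippets`, `phrase_counts`) and the sample transcripts are made up for illustration, and it assumes you already have each transcript as a string.

```python
import re
from collections import Counter

# Trigger phrases from the list above; extend with your own.
TRIGGER_PHRASES = [
    "we're currently using",
    "we were looking for",
    "what's the difference between you and",
    "our biggest pain point is",
]


def normalize(text: str) -> str:
    """Lowercase and straighten curly apostrophes so phrases match reliably."""
    return text.lower().replace("\u2019", "'")


def find_phrase_snippets(transcript: str, phrases=TRIGGER_PHRASES, width: int = 80):
    """Return (phrase, snippet) pairs: each phrase hit plus the text that follows it."""
    text = normalize(transcript)
    hits = []
    for phrase in phrases:
        for match in re.finditer(re.escape(phrase), text):
            snippet = text[match.start(): match.end() + width].strip()
            hits.append((phrase, snippet))
    return hits


def phrase_counts(transcripts):
    """Count how often each trigger phrase appears across all transcripts."""
    counts = Counter()
    for transcript in transcripts:
        for phrase, _snippet in find_phrase_snippets(transcript):
            counts[phrase] += 1
    return counts


if __name__ == "__main__":
    # Hypothetical transcripts, for illustration only.
    calls = [
        "Honestly, we’re currently using spreadsheets. Our biggest pain point is reporting.",
        "We were looking for something simpler. We're currently using two tools.",
    ]
    for phrase, count in phrase_counts(calls).most_common():
        print(count, phrase)
```

The snippets matter more than the counts. They hold the exact wording that follows each phrase, and that wording is what you carry into prompt building.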

Website Behavior Tools

Use GA4 to check your top 10 most visited blog posts, landing pages, and help docs. Use Microsoft Clarity or Hotjar to see how far users scroll and where they rage click.

Look especially for:

- High-traffic pages with low conversions (possible mismatch between what they want vs what you’re giving them)

- Pages where people spend 4+ minutes (they care deeply about that topic)

Good vs bad execution:

- Bad CRM audit:

Looking only at pipeline velocity or win rates. That tells you what happened, not why.

- Good CRM audit:

Reading the notes. Looking for actual language salespeople entered. Phrases like “They compared us to Competitor X” or “Didn’t see value in Feature Y” are prompt-building gold.

- Bad call review:

Listening to one random call while eating lunch and zoning out after 3 minutes.

- Good call review:

Searching calls from closed-won deals, tagging questions that came up 2 or 3 times, and noting exact wording. Bonus if you can pull a list of common objections or phrases people use to describe their problem.

- Bad GA4 usage:

Looking at bounce rate as if it still matters in 2025.

- Good GA4 usage:

Sorting by highest user engagement time and exit rate. Finding what people linger on and where they drop off. Those are your top-of-funnel and middle-of-funnel clues.

What you’re trying to figure out:

- What content people care about most

- Which pain points show up again and again

- What language they’re using to describe their needs

- Where competitors or other third-party platforms show up in the process

You’re not just collecting data. You’re mapping the exact questions real buyers are typing into AI models. And trust me, they are.

Step 3: Build a prompt list based on the buyer journey

Now that you’ve got customer language and internal data, it’s time to turn it into prompts. Not fluffy “ChatGPT please write a summary” stuff. Real prompts that simulate how buyers search for help, evaluate solutions, and make decisions.

How to do it the right way:

Use the classic funnel model as your backbone. Top. Middle. Bottom.

If that feels too B2B-marketer-core for you, you can go with the Problem Awareness scale:

- Problem Unaware

- Problem Aware

- Solution Aware

- Decision Ready

Your goal is to create prompts that match what a real customer would ask a language model at each stage.

Prompt Examples by Stage:

- Top of Funnel / Problem Unaware:

- “Why is my churn rate going up even after product updates?”

- “How do I know if my team is spending too much time on lead scoring?”

- Middle of Funnel / Problem Aware:

- “How to reduce churn in a SaaS company”

- “What are some common issues with lead scoring in RevOps?”

- Solution Aware:

- “Customer success platforms compared”

- “Best lead scoring tools for B2B SaaS in 2025”

- Decision Ready / Bottom of Funnel:

- “Gainsight vs Catalyst for customer success”

- “Top AI tools for lead scoring in B2B SaaS”

- “Should I choose [Your Brand] or [Competitor] for retention analytics?”

How to generate these prompts efficiently:

- Take the raw language from Steps 1 and 2 and drop it into ChatGPT. Prompt it like this:

“Based on the following customer quotes, generate 10 realistic prompts someone might ask when searching for a solution like ours. Use their tone, not a marketer’s.” Then paste in a list of quotes or pain points you collected.

- Use Perplexity or Claude to see how current prompts are answered. See which formats get the most specific and relevant results, then model yours after those.

- Keep your prompt phrasing natural. If you wouldn’t ask a coworker the question that way, don’t use it.

Good vs bad execution:

- Bad prompt creation:

- “Best SaaS company with innovative AI solutions”

No one talks like that unless they’re writing a press release.

- Good prompt creation:

- “Which AI customer support tools help reduce tickets without hiring more reps?”

That’s a real thought someone has while sipping coffee and trying to meet a KPI.

- Bad coverage:

- 20 prompts about features and nothing else. That tells you what your product does, not how people decide to buy it.

- Good coverage:

- 25 prompts at the top, 35 in the middle, 40 at the bottom, reflecting what people actually search across the full journey.

What to avoid:

- Marketing speak or jargon that would never show up in a casual search

- Prompts that only highlight your product

- Over-engineered prompts with too much context. Let the model simulate the uncertainty of a real buyer

You’re not trying to trick the model. You’re trying to mirror what your customer would actually ask when they’re confused, skeptical, or actively comparing options.

Once you’ve got 100 prompts, you’ve got a foundation. That’s your new rank tracking system.

Step 4: Choose your test set

You’ve got your prompt list. Now it’s time to narrow it down to the most useful 100. Not the ones that sound cool. Not the ones that make your product look amazing.

The ones that actually represent how buyers move through their decision-making process. This isn’t about being comprehensive. It’s about being strategic.

How to do it:

- Create a spreadsheet or table. Columns should include:

- Prompt

- Funnel stage (TOFU, MOFU, BOFU)

- Intent (Informational, Navigational, Transactional)

- Target persona (if you’ve got multiple ICPs)

- Competitor mentions (if any)

- Source type expected (e.g., blog post, product page, Reddit thread)

- Score each prompt. Use a simple 1 to 3 scale based on:

- Clarity: Does the prompt clearly reflect a real buyer question?

- Coverage: Does it help you understand a specific stage of the journey?

- Relevance: Does it match what your solution is designed to solve?

- If a prompt scores 1s across the board, delete it. Nobody’s asking that.

- Balance your stages. Aim for a mix like:

- 30 TOFU (awareness and pain-seeking)

- 40 MOFU (solution research and exploration)

- 30 BOFU (ready to buy, comparing options)

- You don’t need to be strict. Just avoid 90 prompts at the bottom of the funnel and 2 at the top.

- That’s how you build a biased dataset that tells you nothing useful.

- Stress test your set. Share it with one teammate or stakeholder. Ask:

- “If this were the only list of prompts we tracked for the next quarter, would it tell us anything useful about where we stand in LLMs?”

- If the answer’s no, go back and swap out fluff for signal.
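If your spreadsheet is getting unwieldy, the scoring and balancing steps above can be sketched in Python. Treat this as a rough sketch under a few assumptions: the `PromptRow` fields mirror the columns and the 1 to 3 scores described above, the names (`drop_weak`, `pick_test_set`) are invented for illustration, and ranking by summed score is just one simple way to choose within a stage.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class PromptRow:
    prompt: str
    stage: str       # "TOFU", "MOFU", or "BOFU"
    clarity: int     # 1 to 3
    coverage: int    # 1 to 3
    relevance: int   # 1 to 3

    @property
    def total(self) -> int:
        return self.clarity + self.coverage + self.relevance


def drop_weak(rows):
    """Remove prompts that score 1 on all three criteria (a total of exactly 3)."""
    return [row for row in rows if row.total > 3]


def pick_test_set(rows, targets):
    """Keep the highest-scoring prompts per stage, up to each stage's target count."""
    selected = []
    for stage, limit in targets.items():
        in_stage = [row for row in rows if row.stage == stage]
        in_stage.sort(key=lambda row: row.total, reverse=True)
        selected.extend(in_stage[:limit])
    return selected


if __name__ == "__main__":
    # Hypothetical rows and scores, for illustration only.
    rows = [
        PromptRow("Why is my churn rate going up even after product updates?", "TOFU", 3, 3, 2),
        PromptRow("How to reduce churn in a SaaS company", "MOFU", 3, 2, 3),
        PromptRow("Gainsight vs Catalyst for customer success", "BOFU", 3, 3, 3),
        PromptRow("Best SaaS company with innovative AI solutions", "MOFU", 1, 1, 1),
    ]
    targets = {"TOFU": 30, "MOFU": 40, "BOFU": 30}
    test_set = pick_test_set(drop_weak(rows), targets)
    print(Counter(row.stage for row in test_set))
```

The stage counts printed at the end are the quick sanity check: if one stage is nearly empty, you know where to go write more prompts before you start tracking.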

Good vs bad execution:

- Bad test set:

100 prompts that are basically the same sentence with slight variations:

- “Best AI writing software”

- “Top AI writing tools”

- “AI writing software comparison”

Cool. You just ran the same query three times and wasted two-thirds of your testing.

- Good test set. A mix like:

- “How do I reduce time spent on customer support without hiring?”

- “Best AI tools for support ticket deflection”

- “Zendesk vs Intercom vs Forethought”

- “Are AI chatbots accurate enough for healthcare?”

That’s range. That’s insight.
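One cheap way to catch the “same sentence three times” problem is to measure word overlap between prompts. Here’s a rough sketch using Jaccard similarity on word sets. The function names and the 0.3 threshold are my own assumptions, not a standard. It only catches shared wording, not shared meaning, so treat the output as a list of pairs to eyeball.

```python
import re
from itertools import combinations


def tokens(prompt: str) -> set:
    """Lowercased set of words and numbers in a prompt."""
    return set(re.findall(r"[a-z0-9]+", prompt.lower()))


def jaccard(a: str, b: str) -> float:
    """Shared words divided by total distinct words across both prompts."""
    ta, tb = tokens(a), tokens(b)
    if not ta or not tb:
        return 0.0
    return len(ta & tb) / len(ta | tb)


def near_duplicates(prompts, threshold: float = 0.3):
    """Return (prompt_a, prompt_b, score) for every pair at or above the threshold."""
    pairs = []
    for a, b in combinations(prompts, 2):
        score = jaccard(a, b)
        if score >= threshold:
            pairs.append((a, b, round(score, 2)))
    return pairs


if __name__ == "__main__":
    bad_set = [
        "Best AI writing software",
        "Top AI writing tools",
        "AI writing software comparison",
    ]
    good_set = [
        "How do I reduce time spent on customer support without hiring?",
        "Best AI tools for support ticket deflection",
        "Zendesk vs Intercom vs Forethought",
        "Are AI chatbots accurate enough for healthcare?",
    ]
    print(len(near_duplicates(bad_set)), "overlapping pairs in the bad set")
    print(len(near_duplicates(good_set)), "overlapping pairs in the good set")
```

With the example sets above, every pair in the bad set gets flagged and none in the good set does. Tune the threshold against your own list before trusting it.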

Name your prompt groups something that matches your mental model. If you think in funnels, great. If you think in pain points or “Jobs To Be Done,” even better. Just keep it consistent so you can actually compare data later.

You’re building a benchmark here. A clean, well-structured test set is the foundation for everything that follows. Treat it like your GPT analytics dashboard before GPT analytics dashboards exist.

Step 5: Run these prompts across multiple models (to find your actual competitors)

Forget what your sales deck says about your competitive set. LLMs don’t care what market category you think you’re in. They care what content exists, what sources are cited, and what answers feel most relevant to the prompt.

This is where you use your prompt set to figure out who the models believe your competitors are.

1. Choose your models.

Start with the big four that people are actually using:

- ChatGPT (GPT-4.5)

- Claude

- Gemini

- Perplexity

Optional bonus: try Meta’s Llama, Bing Copilot, or search engine LLMs if you’re feeling spicy.

2. Run your prompts manually or via software:

You can do this manually if you’ve only got 20 to 30 prompts. If you’re testing 100+, use tools like:

- Pliant or Clearbit’s new LLM monitoring

- A custom GPT bot with API access

- Manual spreadsheet input plus Loom recordings for faster reviewing later

3. For each prompt, record:

- Which brands are mentioned

- Where in the response they appear (top, middle, footnote)

- What is said about them (are they positioned as leaders, alternatives, comparisons?)

- Any direct competitor matchups (X vs Y)

4. Look for frequency and patterns:

- Which names show up again and again?

- Are the same few players dominating across different prompts and models?

- Does one model favor a different competitor than another?

- Are there any “unknown” players that you weren’t even tracking?
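If you’ve saved the responses as text, the tallying in steps 3 and 4 can be sketched like this. It’s a minimal sketch with assumptions: the `runs` structure, the function names, and the sample responses are invented for illustration, and it only counts brands you already listed. It won’t discover the “unknown” players for you, so you still need to read the responses.

```python
import re
from collections import Counter, defaultdict


def find_mentions(response: str, brands):
    """Return the listed brands found in a response, ordered by first appearance."""
    found = []
    for brand in brands:
        pattern = r"\b" + re.escape(brand) + r"\b"
        match = re.search(pattern, response, re.IGNORECASE)
        if match:
            found.append((match.start(), brand))
    return [brand for _position, brand in sorted(found)]


def tally(runs, brands):
    """Count, per model, how often each brand is mentioned and how often it is named first.

    `runs` is a list of (model, prompt, response_text) tuples.
    """
    mentions = defaultdict(Counter)
    named_first = defaultdict(Counter)
    for model, _prompt, response in runs:
        ordered = find_mentions(response, brands)
        mentions[model].update(ordered)
        if ordered:
            named_first[model][ordered[0]] += 1
    return mentions, named_first


if __name__ == "__main__":
    # Hypothetical responses, for illustration only.
    brands = ["Zendesk", "Intercom", "Forethought"]
    runs = [
        ("ChatGPT", "Best AI tools for support ticket deflection",
         "Zendesk and Intercom are popular options."),
        ("Perplexity", "Best AI tools for support ticket deflection",
         "Forethought is often cited, followed by Zendesk."),
    ]
    mentions, named_first = tally(runs, brands)
    for model in mentions:
        print(model, dict(mentions[model]), "named first:", dict(named_first[model]))
```

Being named first is only a crude stand-in for “where in the response they appear.” What is actually said about each brand still needs a human read.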

Good vs bad execution:

- Bad: You type in a bunch of prompts, get excited your brand showed up once, then close the laptop and go to lunch.

- Good: You discover that in 65% of “solution aware” prompts, your competitor’s blog is being cited in Perplexity responses, while Gemini tends to favor large analyst sources. You document that. Now you know what types of content are earning them visibility and where.

This is how you find your “AI-surfaced competitors”—the ones your buyers might see before they even know you exist. And spoiler alert: they’re not always the folks you’ve been pitching against.

Track the names, the citations, and the tone. That’s your new map.

Step 6: Plug into your AI monitoring tool of choice

Now that you’ve got your prompts dialed in and you’ve seen who’s showing up manually, it’s time to scale the process.

You don’t need to copy-paste 100 prompts into 4 different chatbots every Monday for the rest of your life. That’s what monitoring tools are for.

These platforms automate the prompt testing, track model responses over time, and surface trends that matter.

Here’s how to do it right:

- Upload or import your prompt set

Most tools let you do this via spreadsheet, API, or just a bulk entry field. Make sure to label your prompts by stage or intent if possible.

- Choose your frequency

Weekly runs are ideal. Daily will show too much noise, and monthly is too slow to spot shifts in time. LLM results change fast.

- Let the data collect for at least 3 to 4 weeks

You’re not optimizing yet. You’re building your baseline: a snapshot of where your brand, competitors, and relevant content sources stand across the AI ecosystem.

- Check your coverage

Does the tool track the models you care about? You want visibility across ChatGPT, Gemini, Claude, Perplexity, and others. Don’t settle for a single-model snapshot.

What to look for in your dashboard:

- Brand mention frequency across prompts and models

- Competitive visibility: who’s winning in each journey stage

- Source types: blog posts, review sites, help docs, etc.

- Positioning: are you a top mention or buried in the “alternatives” section?

- Gaps: prompts where you should appear but don’t
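If your tool lets you export raw results, you can sanity-check two of these numbers yourself. The sketch below assumes an export with one row per prompt and model, with `prompt`, `stage`, and `brands` fields. That row shape and the function names are my own assumptions, not any vendor’s format.

```python
from collections import Counter, defaultdict


def visibility_by_stage(rows):
    """For each stage, the share of responses that mention each brand."""
    totals = Counter()
    hits = defaultdict(Counter)
    for row in rows:
        totals[row["stage"]] += 1
        # set() so a brand counts once per response, even if repeated.
        hits[row["stage"]].update(set(row["brands"]))
    return {
        stage: {brand: count / totals[stage] for brand, count in hits[stage].items()}
        for stage in totals
    }


def gaps(rows, my_brand):
    """Prompts where `my_brand` was not mentioned by any model."""
    seen = defaultdict(bool)
    for row in rows:
        seen[row["prompt"]] = seen[row["prompt"]] or my_brand in row["brands"]
    return sorted(prompt for prompt, found in seen.items() if not found)


if __name__ == "__main__":
    # Hypothetical export rows, for illustration only.
    rows = [
        {"prompt": "How to reduce churn in a SaaS company", "stage": "MOFU",
         "model": "ChatGPT", "brands": ["Competitor A", "Your Brand"]},
        {"prompt": "How to reduce churn in a SaaS company", "stage": "MOFU",
         "model": "Claude", "brands": ["Competitor A"]},
        {"prompt": "Customer success platforms compared", "stage": "BOFU",
         "model": "ChatGPT", "brands": ["Competitor A", "Competitor B"]},
    ]
    print(visibility_by_stage(rows))
    print(gaps(rows, "Your Brand"))
```

The gap list is the useful part: it’s the shortlist of prompts where you should appear and don’t.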

Good vs bad execution:

- Bad:

You upload prompts, skim a few dashboards, then move on because your name popped up once in ChatGPT and it felt validating.

- Good:

You log trends weekly, note inconsistencies, and start to see where the same three competitors are dominating the answers. You identify prompts where you have the right to win but aren’t showing up, and make a plan to change that.

Treat your first four weeks like a visibility audit. Export everything. Annotate key prompts. Write a short internal summary of what you’re seeing and where you’re missing. This becomes your baseline to compare future optimization against.

You can’t make smart moves without knowing the starting line. This is how you find it.

Step 7: Analyze the trends, not the noise

This is where everything you’ve set up finally starts to pay off. But only if you know what to look at.

You’re not here to panic when your brand drops off one Gemini result on a Tuesday. That’s noise. You’re here to watch the momentum, spot the patterns, and build a plan that moves the needle over time.

Here’s how to analyze the data like someone who knows what they’re doing:

1. Identify consistent competitors

Which brands are showing up across multiple models, prompts, and weeks? These aren’t random results. These are your real LLM competitors—regardless of whether they’re in your current sales battle cards.

2. Study the sources

Which content is getting cited over and over again? Blog posts? Community forums? Product comparison pages? These are your new SEO targets. If models are pulling from them, your content should be there—or better.

3. Look at content format, not just topic

Are video transcripts showing up? PDFs? Long-form blog guides? Are your competitors’ docs ranking because they answer specific use cases better than your slick homepage ever could? Now you know what to build.

4. Track shifts across time

Once you have 3 to 4 weeks of data, start mapping:

- Where your brand shows up consistently

- Where it disappeared

- Where a competitor suddenly broke in

That’s the moment you figure out what changed. Did they publish a new guide? Launch on ProductHunt? Win a case study backlink from G2? Reverse-engineer it.
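The week-over-week mapping above is mostly set arithmetic. Here’s a rough sketch, assuming you keep one set per week of the prompts where your brand appeared and one set per week of the brands you saw. Those structures and the function names are illustrative, not any tool’s export format.

```python
def classify_prompts(weekly_presence):
    """Split prompts into ones where the brand showed up every week and ones it dropped out of.

    `weekly_presence` is a list (oldest week first) of sets of prompts
    where the brand appeared that week.
    """
    if not weekly_presence:
        return {"consistent": set(), "disappeared": set()}
    latest = weekly_presence[-1]
    earlier = set().union(*weekly_presence[:-1])
    return {
        "consistent": set.intersection(*weekly_presence),
        "disappeared": earlier - latest,
    }


def new_entrants(weekly_brands):
    """Brands seen in the latest week that never appeared in any earlier week.

    `weekly_brands` is a list (oldest week first) of sets of brand names.
    """
    if not weekly_brands:
        return set()
    earlier = set().union(*weekly_brands[:-1])
    return weekly_brands[-1] - earlier


if __name__ == "__main__":
    # Hypothetical four weeks of data, for illustration only.
    presence = [
        {"prompt 1", "prompt 2", "prompt 3"},
        {"prompt 1", "prompt 2"},
        {"prompt 1", "prompt 2"},
        {"prompt 1", "prompt 4"},
    ]
    brands_seen = [
        {"Competitor A"},
        {"Competitor A"},
        {"Competitor A"},
        {"Competitor A", "Competitor B"},
    ]
    print(classify_prompts(presence))
    print(new_entrants(brands_seen))
```

A single week’s drop still counts as “disappeared” here, so check whether it persists the following week before you treat it as more than noise.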

5. Prioritize action, not admiration

Seeing your brand show up is cool. But if it’s showing up in one low-impact prompt once a month, who cares? Focus on the high-intent, frequently cited prompts where you’re missing. That’s where revenue lives.

Good vs bad execution:

- Bad:

You scroll through graphs, feel vaguely proud, send a screenshot to your CEO, then forget to do anything with it.

- Good:

You flag the top 10 prompts where your competitors are dominating. You identify the content formats being cited. You turn that into a roadmap for what to build, update, or promote next quarter.

This isn’t a one-time audit. This is now part of your ongoing content and SEO strategy. Language models are already shaping how your customers discover, compare, and trust brands. Ignoring it doesn’t make it go away.

Watching it gives you the edge. Acting on it makes you unmissable.

Best AI (LLM) Brand Visibility Monitoring Tools In 2025

As LLMs become the new front door to product discovery, tracking how your brand shows up in AI-generated answers isn’t optional. It’s part of your strategy now.

Below are the top tools in 2025 helping marketers monitor, analyze, and improve their brand’s visibility inside AI responses across ChatGPT, Perplexity, Gemini, and more.

1. Scrunch AI – Best For Proactive AI Optimization

Scrunch AI helps brands monitor and optimize how they appear in AI-generated search results across platforms like ChatGPT, Perplexity, and Google’s AI overviews. More than just visibility tracking, Scrunch identifies content gaps, misinformation, and outdated info that may be hurting your AI presence—and then shows you how to fix it. It’s a favorite among enterprise marketing teams who want to actively shape their AI discoverability, not just observe it.

Lowest tier pricing: $300/month

Free tier?: No

Key Features:

- AI Search Monitoring – track brand mentions, share-of-voice, and sentiment in AI results

- Knowledge Hub – detect content gaps or incorrect AI-generated info about your brand

- Insights & Recommendations – content improvements to increase AI visibility

- Journey Mapping – visualize how AI interprets customer journeys involving your brand

- AI Optimization Tools – structure and rewrite content for better AI model interpretation

Year created: 2023

Founder: Chris Andrew (CEO), Robert MacCloy (CTO)

Company URL: https://scrunchai.com

LinkedIn URL: https://www.linkedin.com/company/scrunchai

Average Review Rating: 5.0/5 (G2, based on ~10 reviews)

Real User Feedback:

- “Scrunch AI has been instrumental in helping us stay ahead of the curve as AI reshapes ecommerce and search… It provides strategic depth – not just surfacing keywords, but showing how AI models interpret our brand and content.”

- “Still evolving – some features are still rolling out – but the pace of iteration is fast and the team is very receptive. Everything we’ve needed has either been addressed or is on the roadmap.”

2. Peec AI – Best For Actionable Insights

Peec AI is a Berlin-based platform designed to help marketing teams track, benchmark, and improve brand visibility across major generative AI engines like ChatGPT, Gemini, Claude, and Perplexity. It delivers real-time analytics on brand mentions, third-party citations, and competitor performance inside AI-generated content. What sets Peec apart is its combination of multi-model data with prompt-level insights, making it a tactical ally for teams who want to act quickly on AI visibility gaps. It’s especially useful for those looking to turn generative search into a measurable growth channel.

Lowest tier pricing: €89/month (≈$95 USD)

Free tier?: No, but a 14-day free trial is available

Key Features:

- Brand Visibility Tracking – monitor brand mentions and citations across AI tools

- Competitor Benchmarking – compare your brand’s AI visibility vs competitors

- Source Analysis – identify which third-party sites are driving citations in AI answers

- Prompt/Query Analytics – track which prompts surface your brand, and how often

- Trend & Alert System – monitor visibility shifts and receive alerts on major changes

Year created: 2025

Founder: Marius Meiners (CEO), Tobias Siwona (Co-founder/CTO), and Daniel Drabo (CRO)

Company URL: https://peec.ai

LinkedIn URL: https://www.linkedin.com/company/peec-ai

Average Review Rating: 5.0/5 (Slashdot, early reviews)

Real User Feedback:

- “The Best Tool to Track AI Chat Visibility.” – Slashdot reviewer

- “PeecAI – solid option. Founder was great on the call, most of what we needed. They move fast. Price made sense.” – Reddit, marketing lead

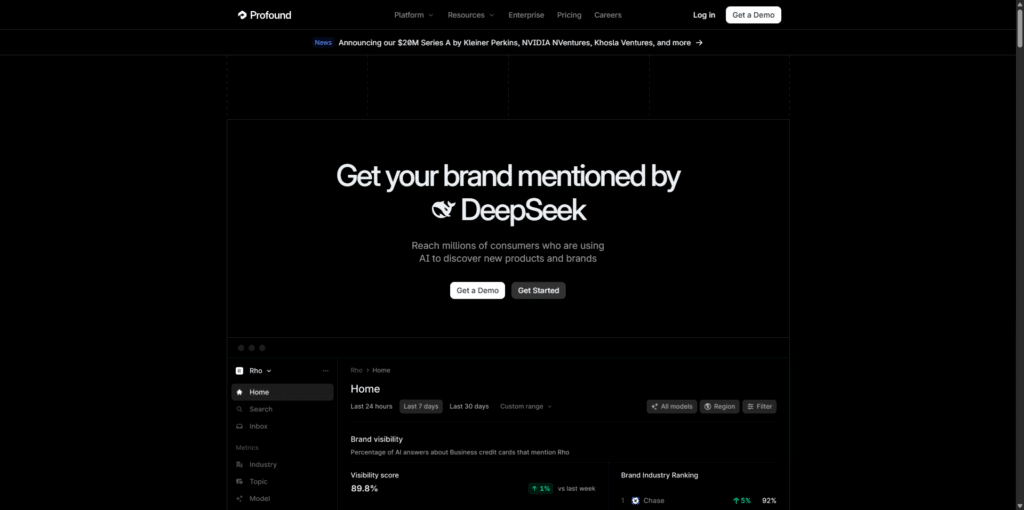

3. Profound – Best For Enterprise SEO Teams

Profound is a premium AI search analytics platform purpose-built for large marketing teams who need deep, accurate visibility into how their brand shows up across generative AI platforms like ChatGPT, Perplexity, Gemini, and Claude. Designed to crack open the “black box” of AI-driven recommendations, Profound gives you data on brand mentions, sentiment, and citations, all mapped to real prompts and queries. It’s trusted by enterprise players like MongoDB and Indeed, and offers robust GEO (Generative Engine Optimization) tooling with advanced source tracking and alerting.

Lowest tier pricing: $499/month (“Profound Lite”)

Free tier?: No, but free demo or trial available upon request

Key Features:

- Answer Engine Insights – dashboards with brand mentions, sentiment, and share-of-voice

- Conversation Explorer – logs real AI user prompts and resulting brand citations

- Citation & Source Tracking – shows which websites/models are referencing your brand

- AI Crawler Analytics – tracks AI agent crawl activity on your site

- Alerts & Recommendations – content improvement suggestions based on data gaps

Year created: 2024

Founder: James Cadwallader (CEO), Dylan Babbs

Company URL: https://tryprofound.com

LinkedIn URL: https://www.linkedin.com/company/tryprofound

Average Review Rating: 4.7/5 (G2, ~56 reviews)

Real User Feedback:

- “Profound has everything – a full feature set and top-tier support – but it comes at a premium price. Conversation Explorer alone is worth it.”

- “Setting up some advanced tracking features wasn’t intuitive at first, but overall Profound has been a lifesaver. The dashboard makes reporting to management painless, and integration was smooth.”

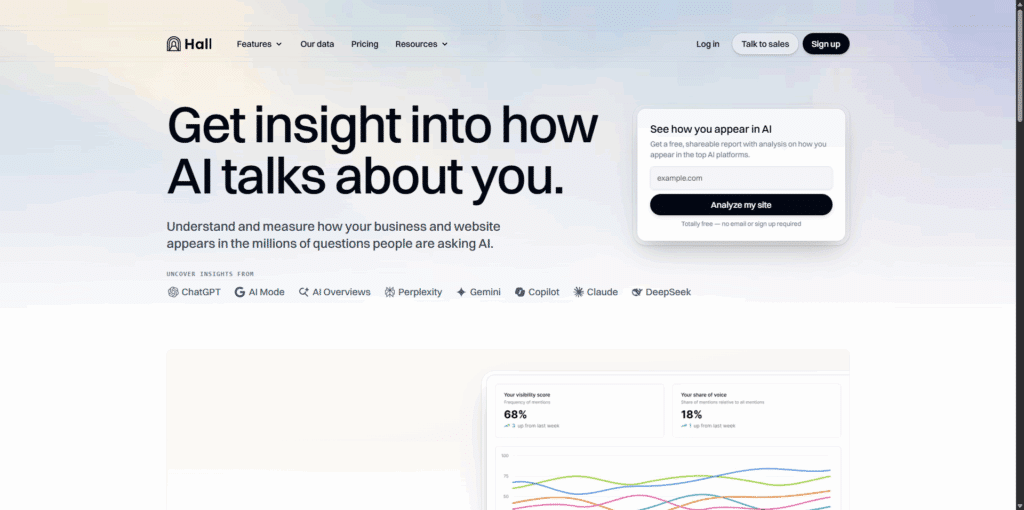

4. Hall – Best For Beginners

Hall is a self-serve AI visibility platform built for marketers who want to understand how their brand shows up across generative AI tools—without needing an enterprise budget or a data analyst on standby. Launched in 2023 and based in Sydney, Hall makes it easy to track brand mentions, page-level citations, AI agent crawl behavior, and even product recommendations inside AI chats. With a generous free plan and real-time dashboards, it’s perfect for small teams or individuals who want to get started quickly and learn fast.

Lowest tier pricing: $199/month (Starter, billed annually)

Free tier?: Yes – Lite plan includes 1 project, 25 tracked prompts, weekly updates

Key Features:

- Generative Answer Insights – track brand mentions, sentiment, and share-of-voice in AI responses

- Website Citation Insights – see which of your pages are being cited in AI-generated content

- Agent Analytics – monitor which AI crawlers are visiting your site and why

- Conversational Commerce – track how your products are recommended in chat-based shopping queries

- Free AI Visibility Report – instant visibility scan with no signup required

Year created: 2023

Founder: Kai Forsyth (CEO & Founder)

Company URL: https://usehall.com

LinkedIn URL: https://www.linkedin.com/company/usehall

Average Review Rating: 5.0/5 (G2, 2 reviews)

Real User Feedback:

- “We quickly understood the exact queries driving referrals from ChatGPT, allowing us to refocus content and drive more leads.” – George Howes, co-founder of MagicBrief

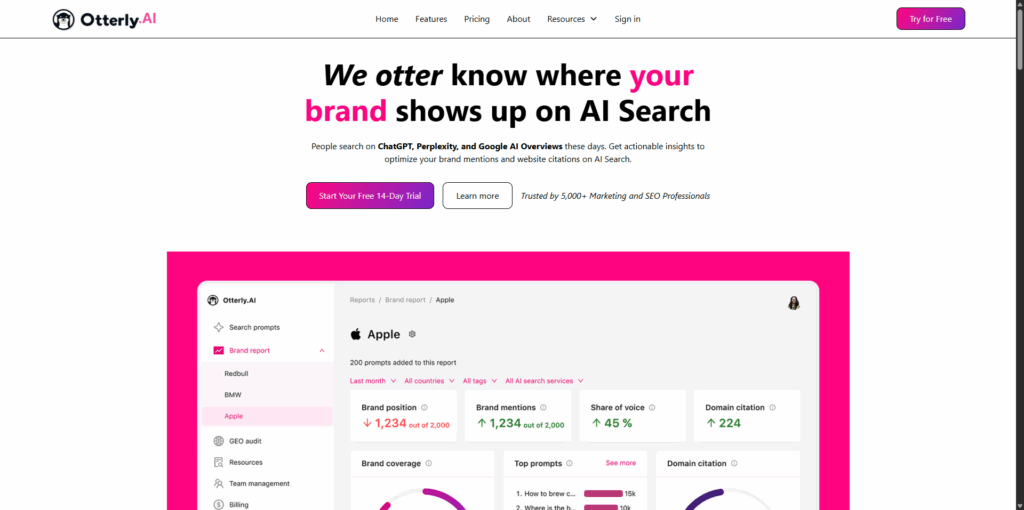

5. Otterly.AI – Best For Startups

Otterly.AI is a lightweight, affordable AI search monitoring tool built for marketers who want to track brand visibility across platforms like ChatGPT, Perplexity, and Google AI Overviews—without needing a giant budget or technical team. Launched in 2023 by a group of seasoned SaaS founders, Otterly focuses on prompt-level insights and practical reporting. Its real-time tracking, intuitive interface, and deep integration with SEO workflows make it an ideal choice for startups looking to understand how AI agents interpret and reference their brand online.

Lowest tier pricing: $29/month (“Lite” plan)

Free tier?: No, but free trials are available

Key Features:

- Multi-Platform AI Monitoring – track mentions across ChatGPT, Perplexity, Google AI Search, and Gemini (beta)

- Prompt & Keyword Tracking – monitor how specific queries surface your brand, and in what context

- Alerts & Reporting – get notified when your brand is mentioned or missing from AI responses

- Sentiment and Context Analysis – see how your brand is framed in AI answers and what topics drive mentions

- Integrations – connects with Slack, Google Sheets, and Semrush for seamless reporting and workflows

Year created: 2023

Founder: Thomas Peham (CEO), Josef Trauner, Klaus-M. Schremser

Company URL: https://otterly.ai

LinkedIn URL: https://www.linkedin.com/company/otterly-ai

Average Review Rating: 5.0/5 (G2, ~12 reviews)

Real User Feedback:

- “My team has been using Otterly.ai for a while, and it’s quickly become an essential part of our stack… The platform is simple to use, the data actionable, and the team behind it is super responsive.”

- “Unlike anything else on the market – Otterly has completely transformed how we approach SEO… The dashboard is intuitive, and AI mention alerts let us catch trends before they peak.”

Get a Free AI Brand Visibility and GEO Audit

We’ll show you exactly where your brand stands and what to do next to win in both SEO and AI-powered search.